[Note: the Jitter processing I use in this work was made by 42%noir. They offer tree.maxpat, and encourage anyone to use this patch]

How is the relationship forming between sound and image?

My process exists within a range of creative action stemming from an infinite set of ‘technical, conceptual, and aesthetic possibilities for using sound and image’ [1]. The Max and Jitter environments help to define an artistic context for shaping that relationship in my work. However, my purpose in making Repetition-studies is not to further define various audiovisual practices. As such, I have recently drawn on an idea Phoebe Sengers describes as critical making. Although her work is rooted in cultural studies of technology, I find her views on critical technical practice valuable when thinking about Repetition-studies. She says the following about this approach to design:

‘Critical technical practice isn’t just about one individual person building something technically and then thinking critically about it … it is also about how ways of technology building bring in particular assumptions about the way that the world is and being able to question those assumptions in order to open up new spaces for making and new spaces for thinking about technology and people.’ [2]

Here, Sengers hopes technologists will take responsibility when contributing to the development of society, rather than simply introducing more ‘hi-tech’ into the world. Her attitude to critical making is also about moving away from institutional intervention, where engineering is considered more of a professional discipline. The focus can therefore be on the so-called amateur and the tools they use for creating. For me, this attitude directly relates to constructing Repetition-studies – a process of questioning assumptions about audiovisual interaction.

Some examples

I have observed individual moments of interaction where particular aspects of sound and image result in a (subjectively) satisfying blend. A given audiovisual interaction can also demonstrate other behaviours such as a subtle range of accompaniment and juxtaposition.

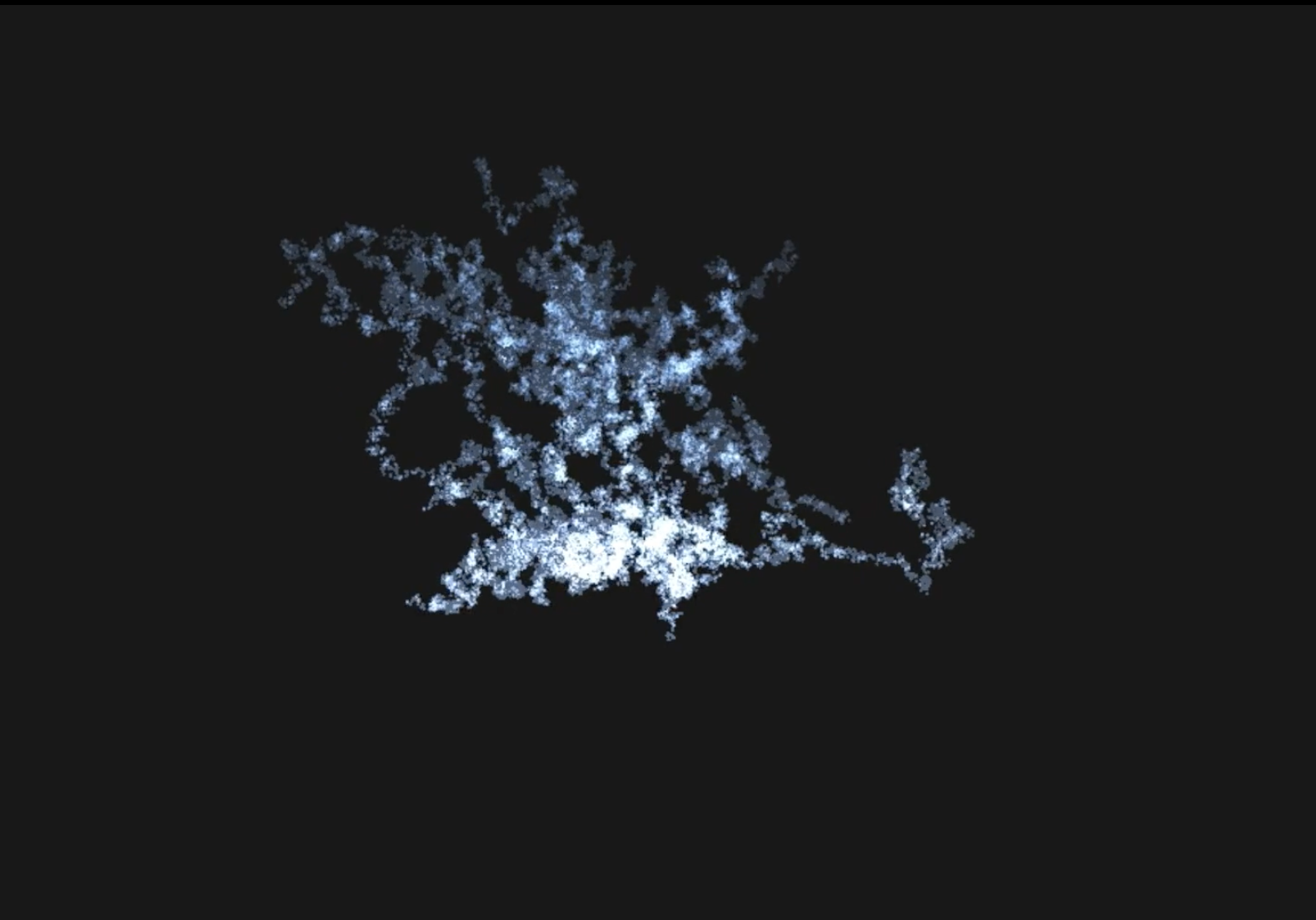

Repetition-studies 1 through 21 can be characterised as introductory studies, a range of short pieces containing aspects of comfortable engagement between sound and image-processing. For example, within the short duration of Repetition-study 21, the sound complements the moving image. Here, a repeating audio fragment is subtly altered by progressively applying granulation and reverb. This action highlights the contrast between density and overall formal depth as the shape in focus moves. In Repetition-study 19, the growth patterns of the tree become juxtaposed over the decaying sounds. A different example can be viewed in Repetition-study 18, alluding to a more interdependent relationship between the sound and image. Although there are three primary sonic layers in this very short study, the tree’s composition appears to interlock directly with the soundscape. Repetition-study 12 demonstrates interdependency as well.

Initially, I did not think Repetition-study 17 ‘worked’: both sonic and visual development occurred much more gradually than in any of the other introductory studies, and there seemed to be nothing linking the elements together. Repetition-study 15 demonstrates a similar interaction.

Another observed behaviour could be characterised as contrapuntal, where either the sound or the image element answers the other. Repetition-study 16 demonstrates this interaction: six seconds into the study, a sound event begins, and it is then countered by the visuals at the 12-second mark. Repetition-study 11 demonstrates less interdependency, and could be characterised as an accompaniment. Here, the sound seems fixed and takes a lead role.

Revisiting the patch

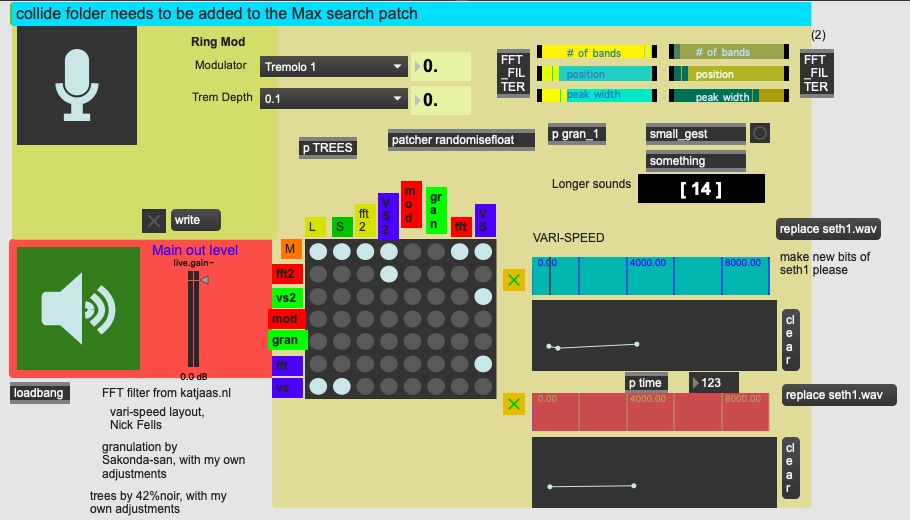

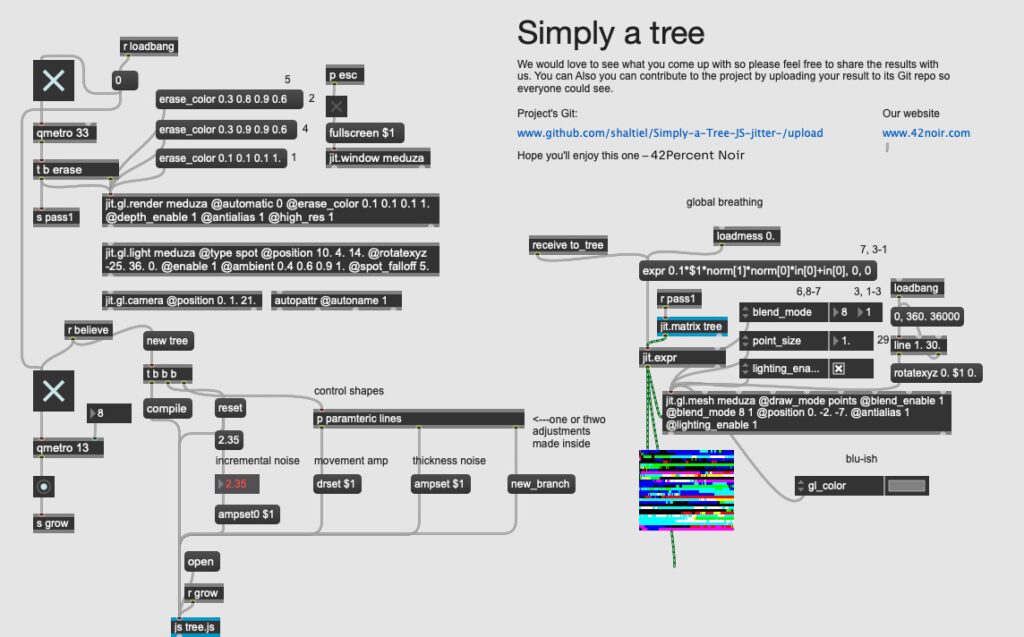

At the end of January, I was inclined to change the patch (Fig. 1), experimenting with more minimal sound streams. As shown below, I have changed the granulation tool and removed the reverb. I have added ring modulation, one FFT filter, another vari-speed player, as well as a different sample player.

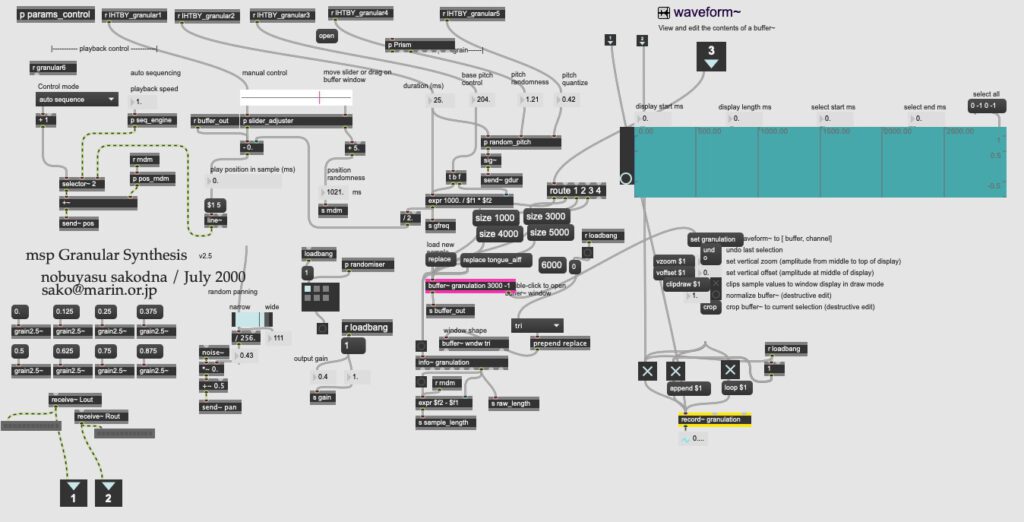

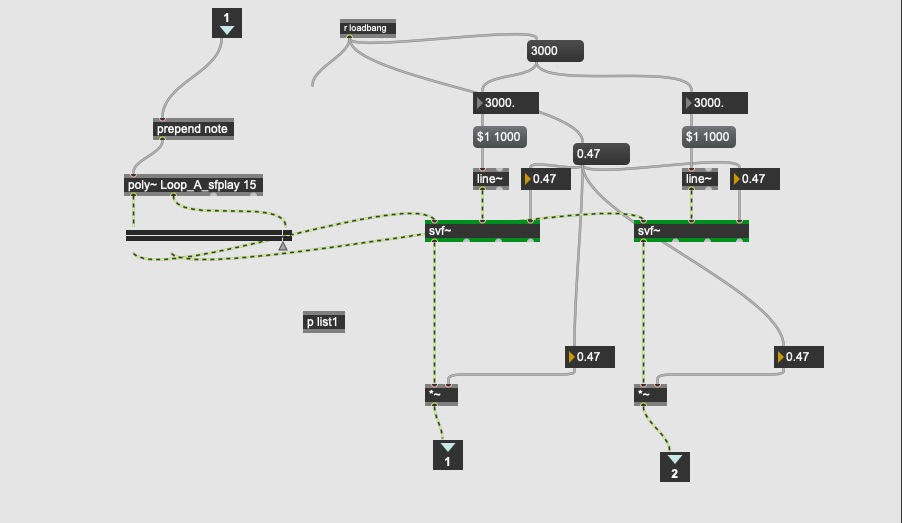
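For readers unfamiliar with ring modulation, the process added to the patch can be illustrated outside Max. The following is a minimal Python sketch, not a transcription of the patch: it assumes a sine-wave modulator and treats depth as a blend between the dry and the fully modulated signal. The function name `ring_modulate` and all parameter values are hypothetical.

```python
import math

def ring_modulate(signal, sample_rate, mod_freq, depth):
    """Multiply a signal by a sine modulator.

    Assumption: depth blends the dry signal (0.0) with the fully
    ring-modulated signal (1.0); the patch may define depth differently.
    """
    out = []
    for n, x in enumerate(signal):
        modulator = math.sin(2.0 * math.pi * mod_freq * n / sample_rate)
        out.append((1.0 - depth) * x + depth * x * modulator)
    return out

# One second of a 440 Hz test tone (illustrative values only).
sample_rate = 8000
tone = [math.sin(2.0 * math.pi * 440.0 * n / sample_rate)
        for n in range(sample_rate)]

dry = ring_modulate(tone, sample_rate, mod_freq=30.0, depth=0.0)
wet = ring_modulate(tone, sample_rate, mod_freq=30.0, depth=1.0)
```

With a depth of 0.0 the input passes through unchanged; at 1.0 the output is the product of the two signals, which replaces the original pitch with sum and difference frequencies.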

In Fig. 2, we can see the granulation subpatch, which has been labelled gran_1 at the top level of collide.maxpat. The new sample player has been placed in a subpatch labelled small_gest (Fig. 3); the trigger button can be seen to the right of the subpatch, which also includes the sample list1.

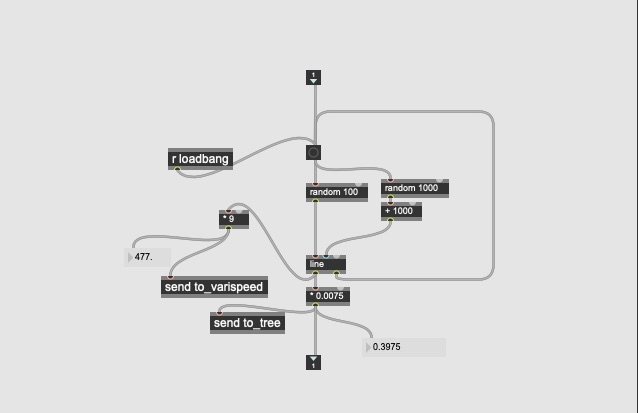

The output of the sample player has been treated with svf~ filters, changing the surface of the sound. The ring modulation, seen just to the right of the ezdac~ at the top level (Fig. 1), provides controls for modulation frequency and modulation depth. Another feature of this patch is the use of a random number generator subpatch named randomisefloat (Fig. 4). The output of this process is sent to tree.maxpat (Fig. 5) through a send object labelled to_tree. A second send object, labelled to_varispeed, also transmits the random output.

These random number values are used in two ways. Here, they are either sent to a jit.expr object, supplying data used to draw a geometric surface, or used as parameters directly affecting the shape of the tree as it grows.
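The routing described above, in which one random stream feeds both the image and the sound, can be sketched in Python as a rough analogy. Only the names randomisefloat, to_tree and to_varispeed come from the patch; the value range, the branch-angle mapping and the playback-rate mapping are assumptions made purely for illustration.

```python
import random

def randomise_float(rng, low=0.0, high=1.0):
    """Stand-in for the randomisefloat subpatch (range is an assumption)."""
    return rng.uniform(low, high)

def route(value):
    """Copy one value to both destinations, as the two send objects do."""
    return {"to_tree": value, "to_varispeed": value}

def tree_branch_angle(value, max_degrees=45.0):
    # Hypothetical mapping: scale the value to a branch angle in degrees.
    return value * max_degrees

def varispeed_rate(value, slowest=0.5, fastest=2.0):
    # Hypothetical mapping: scale the value to a playback rate.
    return slowest + value * (fastest - slowest)

rng = random.Random(22)  # fixed seed so the sketch is repeatable
messages = [route(randomise_float(rng)) for _ in range(4)]

for message in messages:
    angle = tree_branch_angle(message["to_tree"])
    rate = varispeed_rate(message["to_varispeed"])
    print(f"branch angle {angle:5.1f} deg, playback rate {rate:.2f}x")
```

Because both destinations receive the same value, a change in the tree's shape coincides with a change in playback speed, which is one simple way a shared control source can tie image and sound together.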

New results

Relating to the adjustments made to the patch, in Repetition-study 22.1 one can hear a sonic layer veering off intermittently from another accompanying sound stream. This interaction mirrors the visuals. In contrast, the image-processing in Repetition-study 22.2 is accompanied by grainy sonic clusters. The textures in both components are tightly linked together. Here, the granulation used is quite serious due to its potential for heavy noise. These sounds are then carefully processed further. Repetition-study 22.3 demonstrates similar sound design, while adding another counter-layer made from another repeating audio fragment, which has also been processed. Already in these three studies, the listener may hear a slightly more complex sonic mixture. This may be due to the potential for pitting contrasting fragments against one another – all stemming from the use of the two vari-speed player outputs, alongside their processed counterparts.

In Repetition-studies 23.1 and 23.2, I experimented with a recording made during past subway travel in São Paulo. Segments of this source material were distributed throughout the various buffers in the patch, then processed further. In these recordings, I found heavier metallic material contrasted with speech fragments. These studies could be a potential starting point for future audiovisual work which integrates environmental sound.

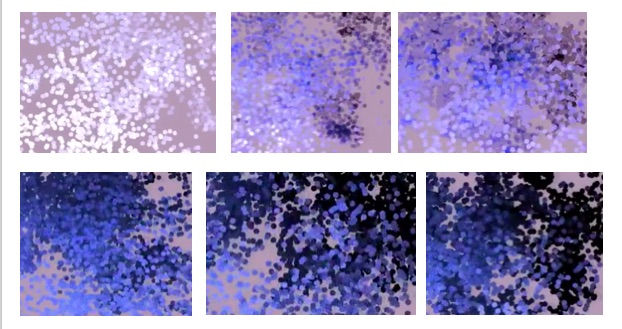

A distinctive feature in other studies could be characterised as an expression of randomly distributed points without the visible ‘tree’ skeleton. For example, studies 23.2 through 23.6 seem to explode outward while moving through a sequence of colour variations. Often, the point size has been slightly enlarged while experimenting with blend mode behaviours in Jitter [3]. In Repetition-study 23.3, one notices transformations of colour where, as points accumulate, a subtle range of colour emerges. These transformations allude to a digital painting technique (Fig. 6).

Some shapes to come

I am already experimenting with other ways of organising sound fragments in Max. Compared to the current approach seen in my patch, I intend for the sound component in the next group of Repetition-studies to draw from a much wider range of samples. At the moment, my patch can be viewed as clusters of processing which recycle materials. One approach could be simply to program Max to perform samples, as if improvising seemingly random patterns. Those samples could then be processed further using an algorithm closely linked to the configuration used for mediating the initial improvisation.
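One possible reading of this idea can be sketched in Python rather than Max. It is a speculative illustration, not a description of any existing patch: the sample names, the timing range, and the rule tying grain length to the chosen sample are all hypothetical.

```python
import random

def improvise(sample_names, n_events, rng, min_gap=0.1, max_gap=1.5):
    """Build a seemingly random sequence of sample triggers."""
    events = []
    onset = 0.0
    for _ in range(n_events):
        onset += rng.uniform(min_gap, max_gap)  # irregular spacing in seconds
        events.append({"onset": round(onset, 3),
                       "sample": rng.choice(sample_names)})
    return events

def processing_for(event, sample_names):
    """Derive a processing setting from the same choice that drove the
    improvisation (hypothetical rule: later samples get longer grains)."""
    index = sample_names.index(event["sample"])
    return {"grain_ms": 20 + 10 * index}

# Hypothetical sample pool and a fixed seed for repeatability.
names = ["metal_a", "metal_b", "speech_a", "speech_b"]
rng = random.Random(23)
events = improvise(names, n_events=6, rng=rng)

for event in events:
    settings = processing_for(event, names)
    print(event["onset"], event["sample"], settings["grain_ms"])
```

The point of the second function is only that the processing reads from the same data that shaped the improvisation, so the two stages stay linked.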

Seth Rozanoff (1977, US) is an electronic musician living in Holland. Rozanoff enjoys using interactive multimedia software for his compositions and when performing. Much of his recent work has taken the form of audiovisual pieces, fixed soundscapes, or instrument-plus-electronics.