[Note: the Jitter processing I use in this work was made by 42%noir. They offer tree.maxpat, and encourage anyone to use this patch]

Introduction

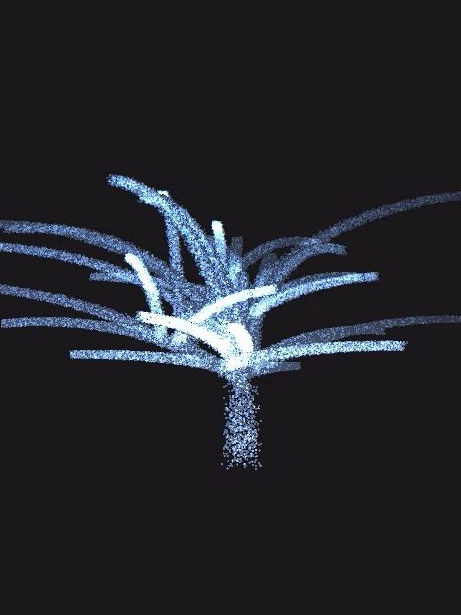

Repetition-studies provides a window into my approach to managing a series of fixed pieces, bringing together repetitive generative visual processing and custom sound design. The image(s) are set up to improvise separately from the sound, meaning a one-to-one causal connection does not have to be used to guide the formation of my work. However, I plan to ‘play’ with that interaction throughout my series. As a starting point, I use image processing to generate fine-grained tree compositions—an improvisation strategy captured in real time. We can view this work as a process of arranging fixed and indeterminate material, able to form an open range of audiovisual mixtures.

Background

My recent practice draws on aesthetic approaches found in the electronic music studio, in particular compositional strategies that mix live and pre-recorded sound sources. Sometimes those concepts are extended by the use of a score, requiring players to learn how to listen to many layers of sound activity during performance or production. Ultimately, the transformation of musical material within these scenarios results in musical dialogue and the subtle layering of timbre, rhythm, filtering, and effects. These materials can be viewed as the result of a range of alterations carried out in the digital processing routines of the laptop performer, in the studio, or through the actions of an instrumentalist.

Let the sounds play . . .

For me, using Jitter within my Max patches has led to other approaches to developing dialogue; shortly after completing my PhD, I began exploring audiovisuality in much of my new work. Since then, I have also worked with other media artists and used Jitter tools made by other developers. One ongoing work in particular, Repetitions (2018), has led to other series such as Geometries and, more recently, Repetition-studies.

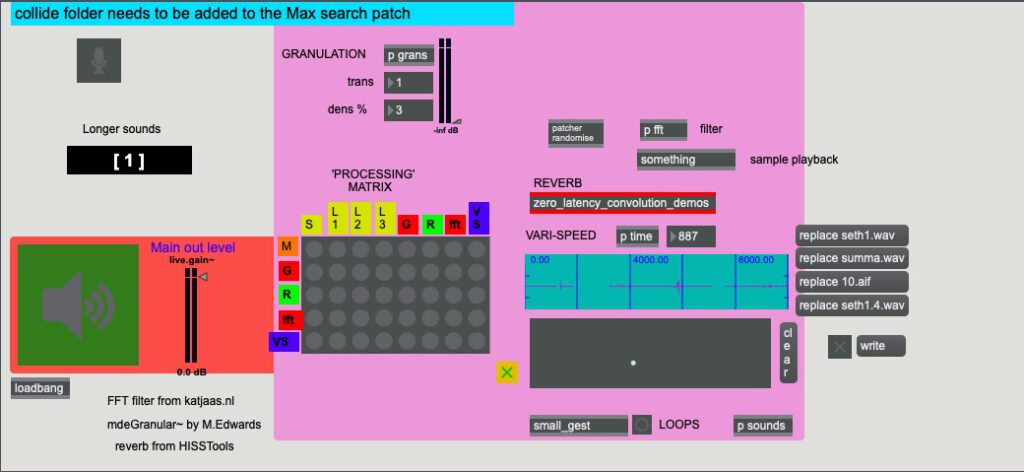

Repetition-studies (2020) began as a way to experiment with minimal soundstreams. For example, Repetition-study II demonstrates how grainy, cloud-like patterning appears to interlock with elements of the accompanying sound. I was not particularly concerned with building a specific relationship between sound and image. Initially, my attitude was simply to see whether interesting combinations of sound and image could be made. Ultimately, I arrived at using delicate and/or minimal soundstreams to enhance the images generated in Jitter. I then organized a Max patch to perform with a range of those types of sounds (Fig. 1). A glance at this patch shows that there are not many types of processing or playback. Instead, there is potential for building new combinations; the processing matrix helps with these subtle additions.

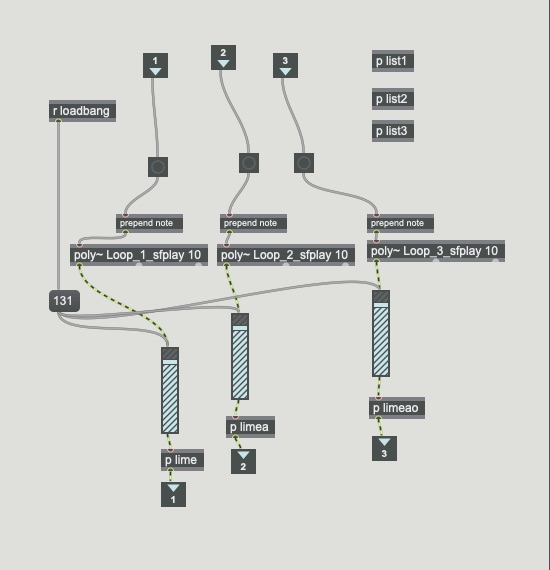

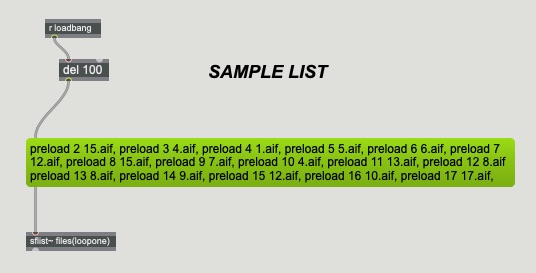

In Repetition-study II, and characteristic of my design in newcollide.maxpat, I include granulation and spectral processing. This patch also includes many sound banks, designed to play a randomly selected file at a random speed; those sounds can then be routed to granulation, reverb, an fft-filter, or the vari-speed playback. When using vari-speed, I usually capture an incoming signal and perform with that sample. However, in Repetition-studies, I use pre-made samples, labelled longer sounds in the patch, which serve to ground the piece musically. In Repetition-study I, the sound which enters at approximately 00:24 is representative of the type of minimal gestures I am interested in shaping further. Many of these longer-sounding fragments have been arranged using the sample lists in newcollide.maxpat. Fig. 2 shows the list1, list2, and list3 subpatches within the sounds subpatch; Fig. 3 shows an example of a sample list.
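
The sound-bank behaviour described above (a randomly selected file, played at a random speed, then sent to one of the processors) can be sketched in plain JavaScript. This is only an illustration of the selection logic, not the contents of newcollide.maxpat: the file names, the speed range of 0.25 to 2.0, and the random choice of destination are all assumptions made for the example, and in the patch the routing may well be set by hand.

```javascript
// Hypothetical sketch of a sound bank: the names and ranges below are
// illustrative assumptions, not values taken from newcollide.maxpat.
const DESTINATIONS = ["granulation", "reverb", "fft-filter", "vari-speed"];

// Return one element of a non-empty list, using rng() in the range [0, 1).
function pickRandom(items, rng = Math.random) {
  if (items.length === 0) {
    throw new Error("cannot pick from an empty list");
  }
  return items[Math.floor(rng() * items.length)];
}

// Choose a file, a playback speed, and a destination for one sound event.
function chooseEvent(bank, rng = Math.random, minSpeed = 0.25, maxSpeed = 2.0) {
  const file = pickRandom(bank, rng);
  const speed = minSpeed + rng() * (maxSpeed - minSpeed);
  const destination = pickRandom(DESTINATIONS, rng);
  return { file, speed, destination };
}

// Example bank with placeholder file names.
const exampleBank = ["longer-sound-a.wav", "longer-sound-b.wav", "longer-sound-c.wav"];
console.log(chooseEvent(exampleBank));
```

Passing the random source in as `rng` keeps the sketch testable: a fixed function in place of `Math.random` makes each choice repeatable.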

In Audio Example 1, I have captured a short example of performing with subtle layers, demonstrating my use of the patch. Furthermore, the musical role of separation emerges in my sonic material, where a contrasting sonic event is pitted against the opening soundscape; this role can be heard throughout Repetition-studies (a good example can be found in Repetition-study I at approximately 00:47). It has also been useful to consider the process of interrelation within the total listening range as mirroring a type of audiovisual interplay throughout Repetition-studies. Although that relationship is an extension of an aesthetic heard in many of my sound works, it is important to consider how the real-time visuals are situated. For example, I consider what I am doing with the generative trees in order to form a cohesive result: I focus on audiovisual development as it relates to how I have managed sound material.

tree.maxpat

The idea here is to use JavaScript in a Jitter patch in order to change the matrix, generating a tree which has a distinctive configuration of branches. As I became familiar with the ‘moving parts’ in the patch, I found there was potential for improvising a wide range of tree-forms. This may seem obvious, but with continued use of the patch, I began to look not only at developing new trees, but also at how aspects of those forms could potentially be matched against a smaller, noise-based sonic range to form an interdependent audiovisual relationship.
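
To give a sense of how a script can produce a distinctive configuration of branches, here is a minimal sketch of recursive branching in plain JavaScript. It is not the code inside tree.maxpat, whose method is not described here; the function `growTree` and the parameters `spread`, `shrink`, and `jitter` are hypothetical names chosen for the example. The sketch only computes line segments and leaves out the step of drawing them into a Jitter matrix.

```javascript
// Hypothetical sketch of a branching tree; this is an assumed approach,
// not the algorithm used in tree.maxpat.
// Each call adds one branch, then grows two shorter child branches from its tip.
function growTree(x, y, angle, length, depth, opts, rng, segments = []) {
  if (depth <= 0) {
    return segments;
  }
  const x2 = x + Math.cos(angle) * length;
  const y2 = y + Math.sin(angle) * length;
  segments.push({ x1: x, y1: y, x2: x2, y2: y2, depth: depth });

  // Small random offset in radians, within [-opts.jitter, +opts.jitter).
  const offset = () => (rng() - 0.5) * 2 * opts.jitter;
  const nextLength = length * opts.shrink;
  growTree(x2, y2, angle - opts.spread + offset(), nextLength, depth - 1, opts, rng, segments);
  growTree(x2, y2, angle + opts.spread + offset(), nextLength, depth - 1, opts, rng, segments);
  return segments;
}

// Illustrative settings: changing these gives a different tree-form.
const treeOptions = { spread: Math.PI / 7, shrink: 0.7, jitter: 0.15 };
const tree = growTree(0, 0, Math.PI / 2, 1.0, 5, treeOptions, Math.random);
console.log(tree.length + " segments");
```

In this sketch, changing `spread`, `shrink`, or `jitter` between runs plays the part of the ‘moving parts’: small changes to a few values produce visibly different trees.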

Moving forward

As my series of short pieces grows, do I notice a stable relationship forming between sound and image?

Seth Rozanoff (1977, US) is an electronic musician living in Holland. Rozanoff enjoys using interactive multi-media software programs for his compositions, and when performing. Much of his recent work has been either audiovisual, fixed soundscapes, or instrument-plus-electronics.